-

11 days to WNS3 2012

If you're not aware already, the 4th International Workshop on NS-3 (WNS3) 2012 is just around the corner. We're almost done with the organising and have a very interesting lineup of presentations. Tom Henderson and Mathieu Lacage will be giving keynote talks. We then have 12 full paper presentations spread across the day, and a shared poster session with the Omnet++ workshop for which we have 8 poster/demo submissions from our side. Have a look at the final program here. If you're an ns-3 user, don't miss this chance to share ideas and learn more about what researchers from around the globe are up to with the project. Don't forget, there's also the developers' meeting the next day. Just add yourself to the wiki if you're interested in attending in person or remotely.

Now if only I can find a damn youth hostel in Desenzano...

-

tcp_probe no workey?

tcp_probe is a very handy utility in Linux to observe TCP flows. It is implemented as a Linux kernel module, so all you have to do is:

$: modprobe tcp_probe [port=]

$: cat /proc/net/tcpprobe > logfile.out

...and you'll get clear statistics about whatever TCP flows go through 'port' (and of all TCP flows if you specify the port as 0).
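Once you have a log file, a small parser makes it easier to plot. The sketch below assumes the whitespace-separated column layout I believe tcp_probe.c printed in kernels of this era (timestamp, source, destination, length, snd_nxt, snd_una, snd_cwnd, ssthresh, snd_wnd, srtt). That layout is an assumption, so check the format string in your own kernel's tcp_probe.c. The names `ProbeSample` and `parse_probe_line` and the sample line are made up for illustration.

```python
from typing import NamedTuple, Optional


class ProbeSample(NamedTuple):
    time: float    # relative timestamp, in seconds
    src: str       # source address:port
    dst: str       # destination address:port
    cwnd: int      # congestion window, in segments
    ssthresh: int  # slow-start threshold, in segments


def parse_probe_line(line: str) -> Optional[ProbeSample]:
    """Parse one log line; return None if it does not match the assumed layout."""
    fields = line.split()
    if len(fields) < 10:
        return None
    try:
        return ProbeSample(
            time=float(fields[0]),
            src=fields[1],
            dst=fields[2],
            cwnd=int(fields[6]),
            ssthresh=int(fields[7]),
        )
    except ValueError:
        return None


# Made-up sample line in the assumed layout, not real measurement data.
sample_line = (
    "2.221032535 10.0.0.2:45678 10.0.0.1:5001 1480 "
    "0x9f2c3a10 0x9f2c0001 12 2147483647 14592 5"
)
print(parse_probe_line(sample_line))
```

Feeding every line of logfile.out through `parse_probe_line` and dropping the `None` results gives you (time, cwnd, ssthresh) series that can go straight into a plotting tool.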

Earlier today, I ran into a situation where tcp_probe would stop logging flows after a couple of seconds, and it always seemed to be around the 10th second in an experiment I was trying (which involved iperf-ing over a wireless link). Some quick searches made it clear that others were encountering it as well, but no one really had a solution for them. Odd.

And what do you do when Google can't help you find the answer? You look through the source code of course!

Within a few seconds of going through tcp_probe.c, I had my answer before me. Have a look at lines 47-49 and you'll know what was wrong in my case.

static int full __read_mostly;

MODULE_PARM_DESC(full, "Full log (1=every ack packet received, 0=only cwnd changes)");

module_param(full, int, 0);

In short, I was on a wireless channel without any nodes apart from my wireless interface and the access point, and my congestion window size was getting maxed out around the 10th second. Since tcpprobe by default logs a packet only if the congestion window size changes, I couldn't see any more packets of the flow I was looking at in /proc/net/tcpprobe.
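The behaviour boils down to one condition. Here is a rough Python model of it. This is not the kernel code; `should_log`, `logged_samples`, and the window values are invented for illustration. It shows why the log goes quiet once the window stops changing:

```python
from typing import List, Optional, Tuple


def should_log(full: bool, cwnd: int, last_cwnd: Optional[int]) -> bool:
    """Model of the filter: with full=1 log every ACK, otherwise only cwnd changes."""
    return full or cwnd != last_cwnd


def logged_samples(cwnds: List[int], full: bool = False) -> List[Tuple[int, int]]:
    """Return (index, cwnd) pairs for the ACKs that would end up in the log."""
    logged = []
    last_cwnd = None
    for i, cwnd in enumerate(cwnds):
        if should_log(full, cwnd, last_cwnd):
            logged.append((i, cwnd))
            last_cwnd = cwnd  # remember the last value that was logged
    return logged


# Hypothetical window sizes: growth, then a window that has maxed out.
cwnds = [10, 20, 40, 80, 80, 80, 80]
print(logged_samples(cwnds))                   # entries stop once cwnd stays at 80
print(len(logged_samples(cwnds, full=True)))   # every ACK is kept
```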

So the solution?

$: modprobe tcp_probe <args> full=1

Note that you might want to look at the log buffer size parameter (bufsize) as well, because tcp_probe happily ignores packets once your log buffer is filled.

-

Fun with TCP CUBIC

I've been working with the folks at Deutsche Telekom Laboratories for my Masters thesis, where I'm dealing with software defined networking, wireless networks, and unicorns. It feels nice to be in a place where people understand both distributed systems AND networks (I still don't understand why there isn't an overlap there, but heck). From discussions on rate adaptation around my desk, and all the way up to distributed state consistency around the coffee machine, this place has it all. I'm having a gala time here worrying about the registers on a wireless card, wading through the zillion 802.11 amendments, hacking device drivers, and pretty much analysing interactions at every layer of the network stack.

My workflow has mostly involved taking measurements to make sure that the problem I'm trying to solve is indeed a problem, trying to reason about the resulting measurements in terms of protocol behaviour/interleaving, and exploring what I can do to improve these measurements in a manner that is practical. As part of the work however, I needed some baseline measurements for TCP behaviour so that at the end of my thesis, I can say something like, "See? After what I did, we now have MOAR TCP AWESUM!!111"

Of course, TCP and wireless are two things that don't really get along well. TCP sees packet loss and delays as "congestion", and depending on the congestion avoidance algorithm, will back down upon hitting such a situation. However, wireless networks suck (and will continue to suck). You can make it suck less probably, but it'll always stay above the suck-thresh. Interference in the physical channel will always be there (like memories of The Phantom Menace, which will haunt us forever). Furthermore, if there's another station on the same channel communicating over a lower bit rate, your station's performance can be degraded real bad (known as the 802.11 performance anomaly). This means that Murphy in the physical layer (only your first hop in most cases!), can end up causing TCP to believe that there is congestion at any of the many links that lead to the destination, and actually back down.

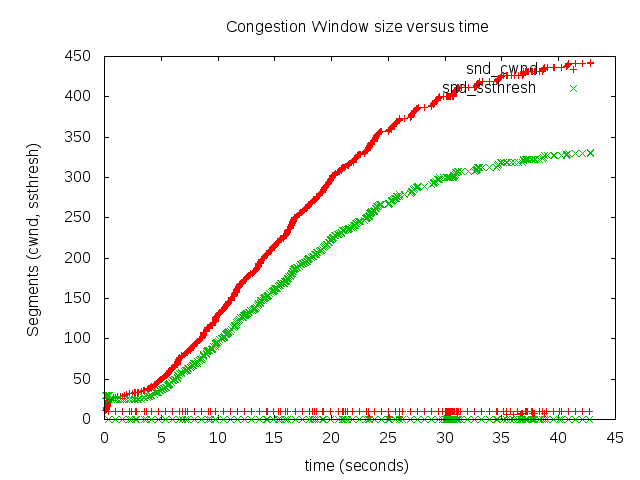

Linux has used TCP CUBIC as the default congestion control algorithm since kernel version 2.6.19. Without going into details of how the different TCP thresholds work in CUBIC, let's look at what its congestion window (CWND) curve looks like.

The above is a measurement of the CWND size on a per packet basis, for a flow generated using iperf. The test network consisted of my laptop running the iperf client with an access point one hop away, bridging all traffic to a server behind it via a switched LAN. I'm sharing a wireless channel with a whole bunch of other nodes. The red line is the CWND size, and the green line is the slow-start threshold (ssthresh) value. As for some quick TCP background, the red line indicates how much data TCP is willing to keep outstanding, and the green line indicates the threshold at which TCP says, "Ok, I think I should not get too greedy now, and will back-out if I feel the network is congested".

The graph above is a typical TCP CUBIC graph. When the congestion window (red) is less than the ssthresh value (green), TCP is in slow start and the CWND grows exponentially (really fast). This can be seen in the near vertical spikes around seconds 3, 9, 35, and 37. When the CWND value is higher than the ssthresh value, TCP CUBIC plays safe, and tries to increase very slowly. For instance, look at how slowly the stretch between 22 and 30 is growing.

As you can see, TCP is detecting congestion multiple times, thanks to a noisy channel, and adjusts itself accordingly.

Next, we look at the curve in a channel where there is no contention at all (that is, no one else on the channel except my wireless interface, and the access point I'm talking to).

Clearly there is no congestion here. The CWND value crosses the slow-start threshold very early (around the 1st second), plateaus since that point, and then goes convex around the 5th second. It then keeps probing for more bandwidth, finds it, and increases the window size steadily over time.
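For the curious, the plateau-then-convex shape comes from the cubic window function in the CUBIC specification (RFC 8312): W(t) = C(t - K)^3 + W_max, with K = cbrt(W_max(1 - β)/C). Below is a minimal sketch of that formula using the RFC's default constants C = 0.4 and β = 0.7. It is not derived from my measurements, the W_max value is made up, and the Linux implementation uses scaled integer arithmetic that differs in detail.

```python
def cubic_k(w_max: float, c: float = 0.4, beta: float = 0.7) -> float:
    """Time (seconds) the cubic curve takes to climb back to w_max after a loss."""
    return (w_max * (1.0 - beta) / c) ** (1.0 / 3.0)


def cubic_window(t: float, w_max: float, c: float = 0.4, beta: float = 0.7) -> float:
    """Window (in segments) t seconds after the last loss: C*(t - K)^3 + W_max."""
    k = cubic_k(w_max, c, beta)
    return c * (t - k) ** 3 + w_max


w_max = 100.0  # hypothetical window size at the last loss event
k = cubic_k(w_max)
for t in (0.0, k / 2, k, 1.5 * k, 2 * k):
    print(f"t={t:5.2f}s  cwnd={cubic_window(t, w_max):7.2f}")
```

For t < K the curve is concave and approaches W_max quickly, around t = K it flattens out into the plateau, and for t > K it turns convex, which is the "probing for more bandwidth" phase.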

-

Towards a Scalable and Highly Available HDFS Namenode

After 3 months of intense hacking, I'm pleased to be writing about a little something I worked on for a project course here at KTH.

The premise

So we're all familiar with Hadoop, right? It's the little yellow elephant that provides an excellent platform for distributed computing, which is seeing rapid adoption by the industry, and involvement from major players like Yahoo!, Facebook and recently, Microsoft. Well, Hadoop and friends use the Hadoop Distributed File System (HDFS) as their underlying storage layer. Given the kind of jobs that are expected to run on top of it, HDFS is designed to store large files, and is optimised for throughput as opposed to latency.

HDFS is a single-master-server based distributed file system. Architecturally speaking, HDFS comprises three important entities:

- Clients, who read/write files from/to the filesystem.

- Datanodes, which actually store the data (blocks) associated with the files.

- The Namenode, which is a central server that stores all the metadata associated with the files, and blocks.

This division between metadata storage and data storage is important, because typical use cases of HDFS are data intensive, and not metadata intensive. That's fine, but the problem is, if the Namenode crashes, the entire file system becomes inoperable because clients and Datanodes still need the metadata to do anything useful. Furthermore, since the Namenode maintains all the metadata only in memory, the number of files you can store on the filesystem is directly proportional to the amount of RAM the Namenode has. As if that's not enough, the Namenode will be completely saturated under write intensive workloads, and will be unable to respond to even simple client side queries like "ls". Have a look at Shvachko's paper which describes these problems at great length and depth, on which we've based our work.

Long story short, the needs of the hour are:

- High availability for the Namenode, i.e., no single point of failure.

- Horizontal scalability for the Namenode, i.e., to handle heavier loads, one would only need to add more Namenodes to the system rather than having to upgrade a single Namenode's hardware.

Our solution

In order to recover from crashes, the Namenode maintains a journal of all changes that it makes to the metadata. This pretty much involves logging every operation to disk, and there is quite a huge piece of code related to this as well. However, the database community has been doing journaling, checkpointing and replicated storage for quite a while. So if you haven't guessed our solution yet, here it is:

"Move all of the Namenode's metadata storage into an in-memory, replicated, share-nothing distributed database."

In short, Namenodes themselves are reduced to a stateless frontend to the database, and fetch state into memory only when required. This comes with the added advantage of being able to have multiple stateless Namenodes for the same filesystem namespace. We chose MySQL Cluster as our database because of its widespread use and stability. So for the filesystem to scale to a larger number of files, one needs to add more MySQL Cluster Datanodes, thus moving the bottleneck from the Namenode's RAM to the DB's storage capacity. For the filesystem to handle heavier workloads, one only needs to add more Namenode machines and divide the load amongst them. Another interesting aspect is that if a single Namenode machine has to reboot, it needn't fetch any state into memory and will be ready for action within a few seconds (although it still has to sync with Datanodes). Another advantage of our design is that the modifications will not affect the clients or Datanodes in any way, except that we might need to find a way to divide the load among the Namenodes.

How we did it

We first dissected all the internal protocols being used in HDFS, i.e., the client-Namenode, Namenode-Datanode, and client-Datanode protocols. Next, we stripped out all the Namenode code that we didn't need. This was pretty much the code related to journaling, checkpointing, the secondary Namenode and so forth.

Next, we identified the key data structures we needed to move to the DB. We picked the two most memory intensive data-structures to migrate first: the Inodes, and the Blocks.

Since we were heavily time constrained (three months to deliver the project and the report), we decided to focus on functional correctness first, and then optimise later. So the easiest course of action seemed to be to modify the lowest levels of the call chain, replacing reads/writes from/to memory with query, insert, update and delete operations on the DB. We developed two helper classes, one each for Inodes and Blocks, and interfaced with the DB through these methods. We used the ClusterJ connector to talk to MySQL. This obviously meant that we needed a flat row representation for Inodes and Blocks in the DB, and we had some other problems to think of as well on the way. How do we index Inodes? How do we index Blocks? What about Triplets?
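To make the flat-row idea concrete, here is a toy sketch in Python. It uses an in-memory SQLite database purely as a stand-in for MySQL Cluster, and the `inodes` schema, the `StatelessNamenode` class, and its methods are all hypothetical. None of this is the KTHFS code or the ClusterJ API. It only illustrates Inodes stored as rows indexed by (parent, name), with two stateless frontends working over the same store.

```python
import sqlite3


def open_store() -> sqlite3.Connection:
    """Create the shared metadata store with a root directory (inode id 1)."""
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE inodes ("
        " id INTEGER PRIMARY KEY,"
        " parent_id INTEGER,"
        " name TEXT NOT NULL,"
        " is_dir INTEGER NOT NULL,"
        " UNIQUE (parent_id, name))"  # doubles as the lookup index
    )
    db.execute("INSERT INTO inodes (id, parent_id, name, is_dir) VALUES (1, NULL, '', 1)")
    db.commit()
    return db


class StatelessNamenode:
    """Keeps no metadata in memory; every operation goes to the store."""

    def __init__(self, db: sqlite3.Connection):
        self.db = db

    def _lookup(self, path: str) -> int:
        inode_id = 1  # start at the root and walk one path component at a time
        for part in [p for p in path.split("/") if p]:
            row = self.db.execute(
                "SELECT id FROM inodes WHERE parent_id = ? AND name = ?",
                (inode_id, part),
            ).fetchone()
            if row is None:
                raise FileNotFoundError(path)
            inode_id = row[0]
        return inode_id

    def create(self, parent_path: str, name: str, is_dir: bool = False) -> None:
        parent = self._lookup(parent_path)
        with self.db:  # commits on success, rolls back on error
            self.db.execute(
                "INSERT INTO inodes (parent_id, name, is_dir) VALUES (?, ?, ?)",
                (parent, name, int(is_dir)),
            )

    def ls(self, path: str) -> list:
        parent = self._lookup(path)
        rows = self.db.execute(
            "SELECT name FROM inodes WHERE parent_id = ? ORDER BY name", (parent,)
        )
        return [r[0] for r in rows]


db = open_store()
nn1, nn2 = StatelessNamenode(db), StatelessNamenode(db)
nn1.create("/", "user", is_dir=True)
nn2.create("/user", "data.txt")
print(nn1.ls("/user"), nn2.ls("/"))
```

Because neither frontend caches anything, an "ls" through either one sees whatever the other wrote, which is the property we were after.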

All in all, we tackled the problem of scaling the Namenode with a set of design decisions which we later found to be consistent with Shvachko's update paper on the Namenode's scalability, except that he suggests using HBase as the DB.

Current status

- Multiple stateless Namenodes run merrily, which store Inode and Block related metadata in MySQL Cluster. As a validation test, Clients can do an "ls" query to any Namenode and see a consistent view of the filesystem regardless of which Namenode updated the DB with the content.

- We're trying to ensure functional correctness using the HDFS unit tests. We got the most important ones to pass, and decided to keep some more bug fixing until later because we needed to evaluate the system as part of the course.

- We've been evaluating the system using the Synthetic Load Generator. Horizontal scalability has been clearly achieved; adding more Namenodes improves the average number of operations per second for different workloads. With write intensive workloads, the scalability is linear in terms of total operations/sec that are executed.

Current limitations

Obviously, our work isn't rainbows and sunshine; there's a long way to go. Here's what we don't have yet and are currently addressing:

- Performance improvements. With a single load-generator thread throwing requests at the Namenode, we're within a 10th of the original Namenode's performance because reads/writes from/to memory now go over a network to a database cluster (which is OK, I guess). But with more LoadGen threads, we're experiencing a hefty bottleneck, which I'll describe in the next point.

- The Namenode isn't fully stateless yet. The most important data structures we're yet to move are the DatanodeDescriptor entities and the file leases. There'll surely be more, but these are the most crucial ones. Once full statelessness is achieved, we can eliminate the read-write locks in the code, which are absolutely not needed any more in our implementation (the Namenode currently uses a multiple-reader-single-writer concurrency model). Profiling experiments indicated that the Namenode spends around 70% of its time waiting to acquire write locks. If we keep the Namenode fully stateless, we can wrap FSNamesystem operations into database transactions which can be batched, and let MySQL Cluster, which handles write-heavy transactions really well, take care of serialisability for us. We can even break away from the single-writer model that the Namenode currently uses. Will this lead to a higher throughput for write operations than the original Namenode? Maybe. :)

- Clients and Datanodes have to be statically partitioned as of now (it sufficed for our evaluations). We need a way for them to pick a random Namenode to perform their operations with.

Talk is cheap, show me the code!

The code is publicly available here for thy scrutiny. You'll also need to have a MySQL Cluster set up in order to test this (we have a hard coded set of defaults in DBConnector.java which you can politely ignore). :) Here's our presentation on it as well. We've dubbed our prototype implementation KTHFS (because we're students at KTH, but yes, no points for creativity on that one).

Future work

As an academic obligation, here's future work (and no I'm not going to write stuff we'll never do).

One member (not me) from the team will be continuing this work as part of his Masters thesis, and plans to address the above mentioned limitations as part of his work. I'll try to contribute as well during my free time (what are weekends for anyway?). Let's see how this goes. :)

-

Let's make metros more interesting

After moving to Europe for my masters, I've grown accustomed to finding my way around cities using the subway trains. They're convenient, relatively cheap (unless you're in Stockholm, where using the word cheap throws an exception), and usually fast enough as well. The only problem I have with metros is that they're boring as shit to travel in, especially when you're alone. Sure you can carry a book with you or invest in a tab/pad/slate/smartphone/whatever, but we definitely need to do something about the view from the windows.

Given that there's hardly anything interesting about rocks and the occasional flash of light in a metro tunnel, I think there's a lot that can be done to make the metro experience better. Here's one idea I have to solve this international crisis which is oh-so-more-important than anything else on this planet. I call it, metro cartoons (the animated ones).

The basic idea is to insert cartoon panels between the tunnel walls and the tracks. Since we know the approximate speed at which the trains move, we can estimate the panel spacing needed to get a reasonable frame rate for the animation. I'm sure artists understand these aspects better than I do, and can come up with the right kind of drawings for this setting.
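As a back-of-the-envelope sketch: if the train moves at speed v and each panel stands in for one frame, then a frame rate of f needs a panel every v / f metres. The speed and frame rate below are made-up illustrative numbers, not figures from any real metro, and `panel_spacing_m` is just a name I picked.

```python
def panel_spacing_m(speed_kmh: float, frames_per_second: float) -> float:
    """Distance between panels (metres) so one panel passes the window per frame."""
    speed_ms = speed_kmh * 1000.0 / 3600.0  # km/h -> m/s
    return speed_ms / frames_per_second


# Hypothetical train at 72 km/h (20 m/s), film-like 24 frames per second.
print(f"{panel_spacing_m(72, 24):.2f} m between panels")
```

With those numbers the panels end up well under a metre apart, so a faster train or a lower frame rate would make the artists' lives easier.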

What say? I'm pretty sure someone must have tried this already, so let me know if you've heard of any such attempt.